Architectures¶

Autoencoder¶

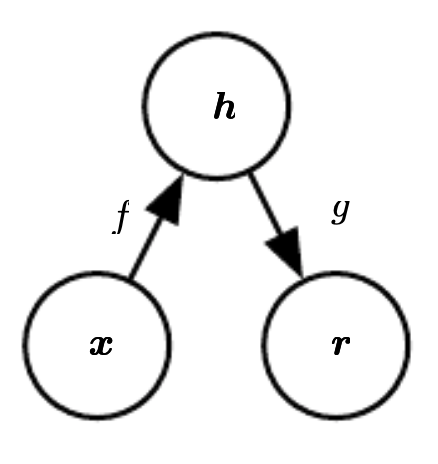

An autoencoder is a type of feedforward neural network that attempts to copy its input to its output. Internally, it has a hidden layer, h, that describes a code, used to represent the input. The network consists of two parts:

- An encoder function: \(h = f(x)\).

- A decoder function, that produces a reconstruction: \(r = g(h)\).

The figure below shows the presented architecture.

Source [6]

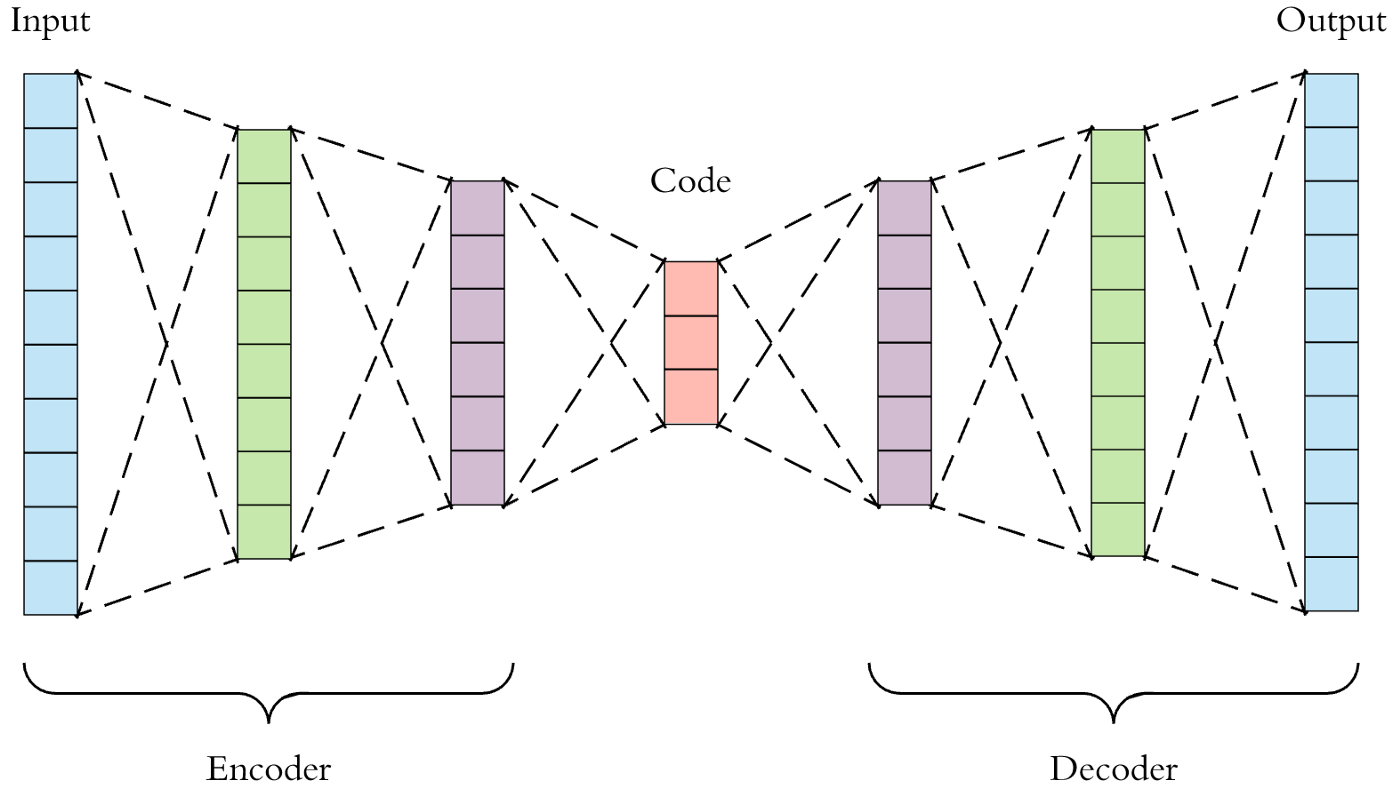

The autoencoder compresses the input into a lower-dimensional code, and then it reconstructs the output from this representation. The code is a compact “summary”, or “compression”, of the input, and it is also called the latent-space representation.

If an autoencoder simply learned to set \(g(f(x))=x\) everywhere, then it would not be very useful; instead, autoencoders are designed to be unable to learn to copy perfectly. They are restricted in ways that allow them to copy only approximately, and to copy only input that resembles the training data. Because the model is forced to prioritize which aspects of the input to copy, it learns useful properties of the data.

In order to build an autoencoder, three things are needed: an encoding method, a decoding method, and a loss function to compare the output with the target.

Both the encoder and the decoder are fully-connected feedforward neural networks. The code is a single layer of an artificial neural network, with the dimensionality of our choice. The number of nodes in the code layer (the code size) is a hyperparameter to be set before training the autoencoder.

The figure below shows the autoencoder architecture. First, the input passes through the encoder, which is a fully-connected neural network, in order to produce the code. The decoder, which has the similar neural network structure, then produces the output by using the code only. The aim is to get an output identical to the input.

Source [5]

Traditionally, autoencoders were used for dimensionality reduction or feature learning. More recently, theoretical connections between autoencoders and latent variable models have brought autoencoders to the forefront of generative modeling. As a compression method, autoencoders do not perform better than their alternatives. And the fact that autoencoders are data-specific makes them impractical as a general technique.

In general, autoencoders have three common use cases:

- Data denoising: It should be noted that denoising autoencoders are not meant to automatically denoise an image, instead they were invented to help the hidden layers of the autoencoder learn more robust filters, and reduce the the risk of overfitting.

- Dimensionality reduction: Visualizing high-dimensional data is challenging. t-SNE [7] is the most commonly used method, but struggles with large number of dimensions (typically above 32). Therefore, autoencoders can be used as a preprocessing step to reduce the dimensionality, and this compressed representation is used by t-SNE to visualize the data in 2D space.

- Variational Autoencoders (VAE): this is a more modern and complex use-case of autoencoders. VAE learns the parameters of the probability distribution modeling the input data, instead of learning an arbitrary function in the case of vanilla autoencoders. By sampling points from this distribution we can also use the VAE as a generative model [8].

Model

An example implementation in PyTorch.

class Autoencoder(nn.Module):

def __init__(self, in_shape):

super().__init__()

c,h,w = in_shape

self.encoder = nn.Sequential(

nn.Linear(c*h*w, 128),

nn.ReLU(),

nn.Linear(128, 64),

nn.ReLU(),

nn.Linear(64, 12),

nn.ReLU()

)

self.decoder = nn.Sequential(

nn.Linear(12, 64),

nn.ReLU(),

nn.Linear(64, 128),

nn.ReLU(),

nn.Linear(128, c*h*w),

nn.Sigmoid()

)

def forward(self, x):

bs,c,h,w = x.size()

x = x.view(bs, -1)

x = self.encoder(x)

x = self.decoder(x)

x = x.view(bs, c, h, w)

return x

Training

def train(net, loader, loss_func, optimizer):

net.train()

for inputs, _ in loader:

inputs = Variable(inputs)

output = net(inputs)

loss = loss_func(output, inputs)

optimizer.zero_grad()

loss.backward()

optimizer.step()

Further reading

CNN¶

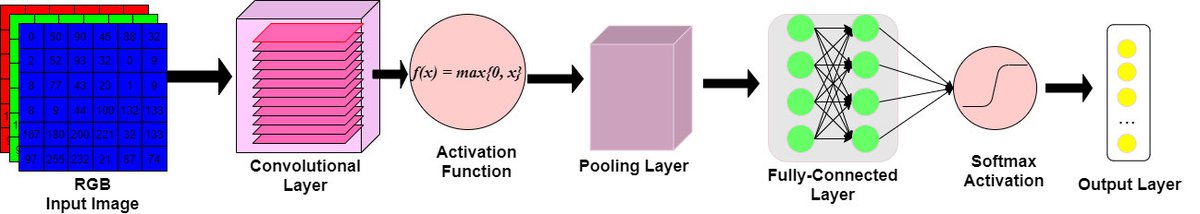

The convolutional neural network, or CNN, is a feed-forward neural network which has at least one convolutional layer. This type of deep neural network is used for processing structured arrays of data. It is distinguished from other neural networks by its superior performance with speech, audio, and especially, image data. For the latter data type, CNNs are commonly employed in computer vision tasks, like image classification, since they are especially good at finding out patterns from the input images, such as lines, circles, or more complex objects, e.g., human faces.

Convolutional neural networks comprise many convolutional layers, stacked one on top of the other, in a sequence. The sequential architecture of CNNs allows them to learn hierarchical features. Every layer can recognize shapes, and the deeper the network goes, the more complex are the shapes which can be recognized. The design of convolutional layers in a CNN reflects the structure of the human visual cortex. In fact, our visual cortex is similarly made of different layers, which process an image in our sight by sequentially identifying more and more complex features.

The CNN architecture is made up of three main distinct layers:

- Convolutional layer

- Pooling layer

- Fully-connected (FC) layer

Overview of CNN architecture. The architecture of CNNs follows this structure, but with a greater number of layers for each layer’s type. The convolutional and pooling layers are layers peculiar to CNNs, while the fully-connected layer, activation function and output layer, are also present in regular feed-forward neural networks. Source: [2]

When working with image data, the CNN architecture accepts as input a 3D volume, or a 1D vector depending if the image data is in RGB format, for the first case, or in grayscale format, for the latter. Then it transforms the input through different equations, and it outputs a class. The convolutional layer is the first layer of the convolutional neural network. While this first layer can be followed by more convolutional layers, or pooling layers, the fully-connected layer remains the last layer of the network, which outputs the result. At every subsequent convolutional layer, the CNN increases its complexity, and it can identify greater portions in the image. In the first layers, the algorithm can recognize simpler features such as color or edges. Deeper in the network, it becomes able to identify both larger objects in the image and more complex ones. In the last layers, before the image reaches the final FC layer, the CNN identifies the full object in the image.

Model

An example implementation of a CNN in PyTorch.

Training

Further reading

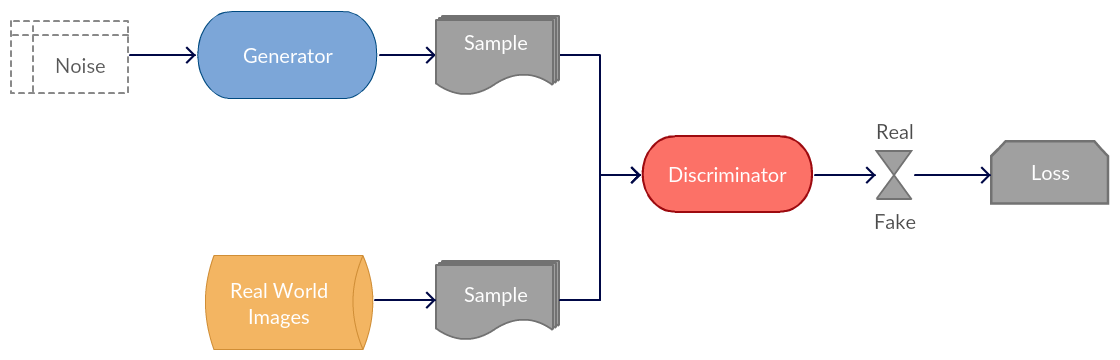

GAN¶

A Generative Adversarial Network (GAN) is a type of network which creates novel tensors (often images, voices, etc.). The generative portion of the architecture competes with the discriminator part of the architecture in a zero-sum game. The goal of the generative network is to create novel tensors which the adversarial network attempts to classify as real or fake. The goal of the generative network is generate tensors where the discriminator network determines that the tensor has a 50% chance of being fake and a 50% chance of being real.

Figure from [3].

Model

An example implementation in PyTorch.

Generator

class Generator(nn.Module):

def __init__(self):

super()

self.net = nn.Sequential(

nn.ConvTranspose2d( 200, 32 * 8, 4, 1, 0, bias=False),

nn.BatchNorm2d(32 * 8),

nn.ReLU(),

nn.ConvTranspose2d(32 * 8, 32 * 4, 4, 2, 1, bias=False),

nn.BatchNorm2d(32 * 4),

nn.ReLU(),

nn.ConvTranspose2d( 32 * 4, 32 * 2, 4, 2, 1, bias=False),

nn.BatchNorm2d(32 * 2),

nn.ReLU(),

nn.ConvTranspose2d( 32 * 2, 32, 4, 2, 1, bias=False),

nn.BatchNorm2d(32),

nn.ReLU(),

nn.ConvTranspose2d( 32, 1, 4, 2, 1, bias=False),

nn.Tanh()

)

def forward(self, tens):

return self.net(tens)

Discriminator

class Discriminator(nn.Module):

def __init__(self):

super()

self.net = nn.Sequential(

nn.Conv2d(1, 32, 4, 2, 1, bias=False),

nn.LeakyReLU(0.2),

nn.Conv2d(32, 32 * 2, 4, 2, 1, bias=False),

nn.BatchNorm2d(32 * 2),

nn.LeakyReLU(0.2),

nn.Conv2d(32 * 2, 32 * 4, 4, 2, 1, bias=False),

nn.BatchNorm2d(32 * 4),

nn.LeakyReLU(0.2),

# state size. (32*4) x 8 x 8

nn.Conv2d(32 * 4, 32 * 8, 4, 2, 1, bias=False),

nn.BatchNorm2d(32 * 8),

nn.LeakyReLU(0.2),

# state size. (32*8) x 4 x 4

nn.Conv2d(32 * 8, 1, 4, 1, 0, bias=False),

nn.Sigmoid()

)

def forward(self, tens):

return self.net(tens)

Training

def train(netD, netG, loader, loss_func, optimizerD, optimizerG, num_epochs):

netD.train()

netG.train()

device = "cuda:0" if torch.cuda.is_available() else "cpu"

for epoch in range(num_epochs):

for i, data in enumerate(loader, 0):

netD.zero_grad()

realtens = data[0].to(device)

b_size = realtens.size(0)

label = torch.full((b_size,), 1, dtype=torch.float, device=device) # gen labels

output = netD(realtens)

errD_real = loss_func(output, label)

errD_real.backward() # backprop discriminator fake and real based on label

noise = torch.randn(b_size, 200, 1, 1, device=device)

fake = netG(noise)

label.fill_(0)

output = netD(fake.detach()).view(-1)

errD_fake = loss_func(output, label)

errD_fake.backward() # backprop discriminator fake and real based on label

errD = errD_real + errD_fake # discriminator error

optimizerD.step()

netG.zero_grad()

label.fill_(1)

output = netD(fake).view(-1)

errG = loss_func(output, label) # generator error

errG.backward()

optimizerG.step()

Further reading

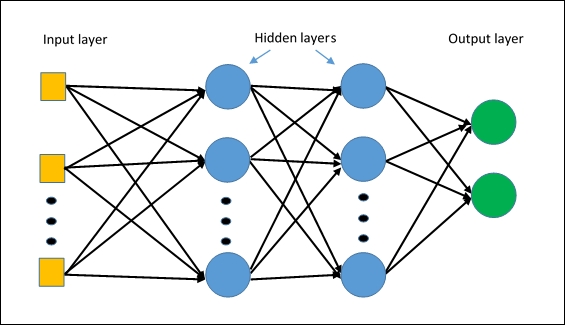

MLP¶

A Multi Layer Perceptron (MLP) is a neural network with only fully connected layers. Figure from [5].

Model

An example implementation on FMNIST dataset in PyTorch. Full Code

- The input to the network is a vector of size 28*28 i.e.(image from FashionMNIST dataset of dimension 28*28 pixels flattened to sigle dimension vector).

- 2 fully connected hidden layers.

- Output layer with 10 outputs.(10 classes)

class MLP(nn.Module):

def __init__(self):

super(MLP,self).__init__()

# define layers

self.fc1 = nn.Linear(in_features=28*28, out_features=500)

self.fc2 = nn.Linear(in_features=500, out_features=200)

self.fc3 = nn.Linear(in_features=200, out_features=100)

self.out = nn.Linear(in_features=100, out_features=10)

def forward(self, t):

# fc1 make input 1 dimentional

t = t.view(-1,28*28)

t = self.fc1(t)

t = F.relu(t)

# fc2

t = self.fc2(t)

t = F.relu(t)

# fc3

t = self.fc3(t)

t = F.relu(t)

# output

t = self.out(t)

return t

Training

def train(net, loader, loss_func, optimizer):

net.train()

n_batches = len(loader)

for inputs, targets in loader:

inputs = Variable(inputs)

targets = Variable(targets)

output = net(inputs)

loss = loss_func(output, targets)

optimizer.zero_grad()

loss.backward()

optimizer.step()

# print statistics

running_loss = loss.item()

print('Training loss: %.3f' %( running_loss))

Evaluating

def main():

train_set = torchvision.datasets.FashionMNIST(

root = './FMNIST',

train = True,

download = False,

transform = transforms.Compose([

transforms.ToTensor()

])

)

mlp = MLP()

loader = torch.utils.data.DataLoader(train_set, batch_size = 1000)

optimizer = optim.Adam(mlp.parameters(), lr=0.01)

loss_func=nn.CrossEntropyLoss()

for i in range(0,15):

train(mlp,loader,loss_func,optimizer)

print("Finished Training")

torch.save(mlp.state_dict(), "./mlpmodel.pt")

test_set = torchvision.datasets.FashionMNIST(

root = './FMNIST',

train = False,

download = False,

transform = transforms.Compose([

transforms.ToTensor()

])

)

testloader = torch.utils.data.DataLoader(test_set, batch_size=4)

correct = 0

total = 0

with torch.no_grad():

for data in testloader:

images, labels = data

outputs = mlp(images)

_, predicted = torch.max(outputs.data, 1)

total += labels.size(0)

correct += (predicted == labels).sum().item()

print('Accuracy of the network on the 10000 test images: %d %%' % (

100 * correct / total))

Further reading

TODO

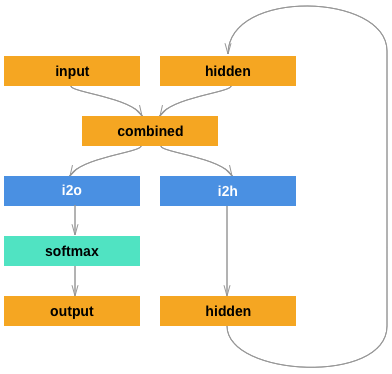

RNN¶

Description of RNN use case and basic architecture.

Model

class RNN(nn.Module):

def __init__(self, n_classes):

super().__init__()

self.hid_fc = nn.Linear(185, 128)

self.out_fc = nn.Linear(185, n_classes)

self.softmax = nn.LogSoftmax()

def forward(self, inputs, hidden):

inputs = inputs.view(1,-1)

combined = torch.cat([inputs, hidden], dim=1)

hid_out = self.hid_fc(combined)

out = self.out_fc(combined)

out = self.softmax(out)

return out, hid_out

Training

In this example, our input is a list of last names, where each name is a variable length array of one-hot encoded characters. Our target is is a list of indices representing the class (language) of the name.

- For each input name..

- Initialize the hidden vector

- Loop through the characters and predict the class

- Pass the final character’s prediction to the loss function

- Backprop and update the weights

def train(model, inputs, targets):

for i in range(len(inputs)):

target = Variable(targets[i])

name = inputs[i]

hidden = Variable(torch.zeros(1,128))

model.zero_grad()

for char in name:

input_ = Variable(torch.FloatTensor(char))

pred, hidden = model(input_, hidden)

loss = criterion(pred, target)

loss.backward()

for p in model.parameters():

p.data.add_(-.001, p.grad.data)

Further reading

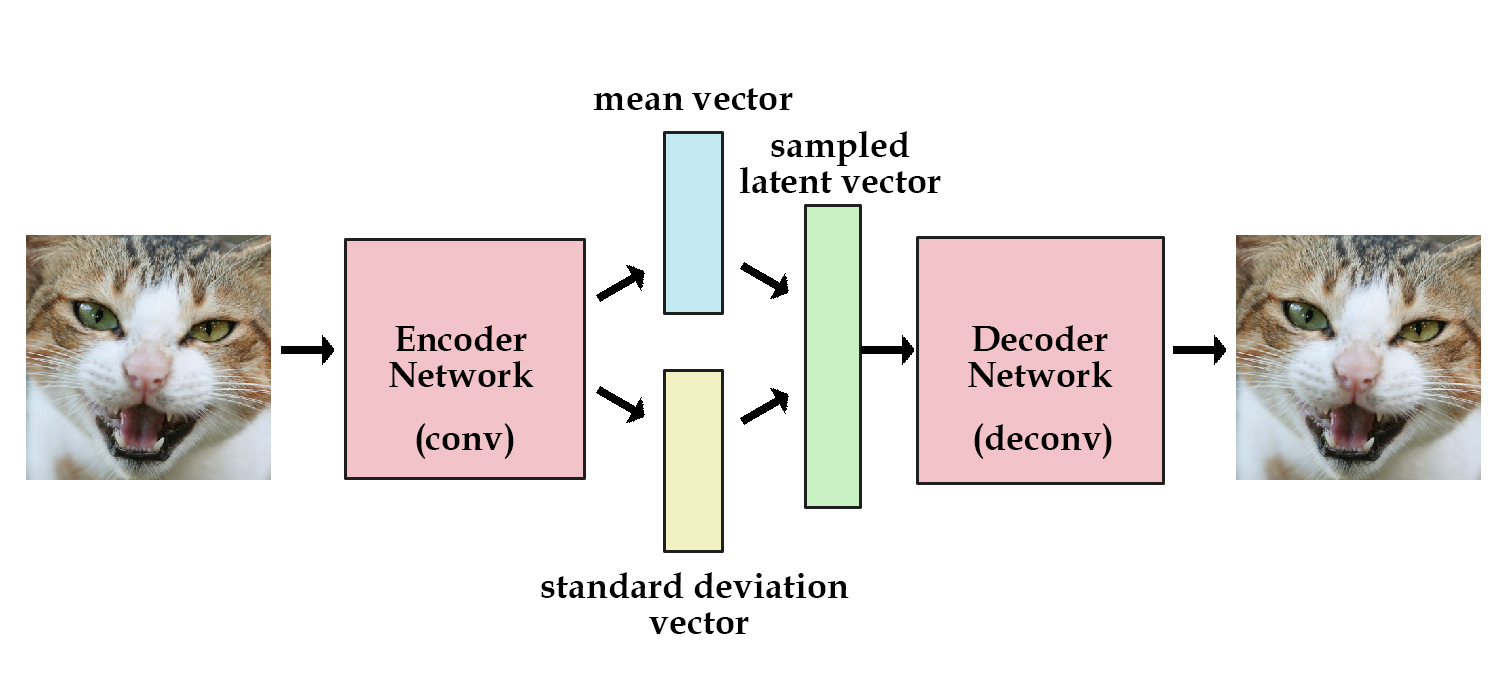

VAE¶

Autoencoders can encode an input image to a latent vector and decode it, but they can’t generate novel images. Variational Autoencoders (VAE) solve this problem by adding a constraint: the latent vector representation should model a unit gaussian distribution. The Encoder returns the mean and variance of the learned gaussian. To generate a new image, we pass a new mean and variance to the Decoder. In other words, we “sample a latent vector” from the gaussian and pass it to the Decoder. It also improves network generalization and avoids memorization. Figure from [4].

Loss Function

The VAE loss function combines reconstruction loss (e.g. Cross Entropy, MSE) with KL divergence.

def vae_loss(output, input, mean, logvar, loss_func):

recon_loss = loss_func(output, input)

kl_loss = torch.mean(0.5 * torch.sum(

torch.exp(logvar) + mean**2 - 1. - logvar, 1))

return recon_loss + kl_loss

Model

An example implementation in PyTorch of a Convolutional Variational Autoencoder.

class VAE(nn.Module):

def __init__(self, in_shape, n_latent):

super().__init__()

self.in_shape = in_shape

self.n_latent = n_latent

c,h,w = in_shape

self.z_dim = h//2**2 # receptive field downsampled 2 times

self.encoder = nn.Sequential(

nn.BatchNorm2d(c),

nn.Conv2d(c, 32, kernel_size=4, stride=2, padding=1), # 32, 16, 16

nn.BatchNorm2d(32),

nn.LeakyReLU(),

nn.Conv2d(32, 64, kernel_size=4, stride=2, padding=1), # 32, 8, 8

nn.BatchNorm2d(64),

nn.LeakyReLU(),

)

self.z_mean = nn.Linear(64 * self.z_dim**2, n_latent)

self.z_var = nn.Linear(64 * self.z_dim**2, n_latent)

self.z_develop = nn.Linear(n_latent, 64 * self.z_dim**2)

self.decoder = nn.Sequential(

nn.ConvTranspose2d(64, 32, kernel_size=3, stride=2, padding=0),

nn.BatchNorm2d(32),

nn.ReLU(),

nn.ConvTranspose2d(32, 1, kernel_size=3, stride=2, padding=1),

CenterCrop(h,w),

nn.Sigmoid()

)

def sample_z(self, mean, logvar):

stddev = torch.exp(0.5 * logvar)

noise = Variable(torch.randn(stddev.size()))

return (noise * stddev) + mean

def encode(self, x):

x = self.encoder(x)

x = x.view(x.size(0), -1)

mean = self.z_mean(x)

var = self.z_var(x)

return mean, var

def decode(self, z):

out = self.z_develop(z)

out = out.view(z.size(0), 64, self.z_dim, self.z_dim)

out = self.decoder(out)

return out

def forward(self, x):

mean, logvar = self.encode(x)

z = self.sample_z(mean, logvar)

out = self.decode(z)

return out, mean, logvar

Training

def train(model, loader, loss_func, optimizer):

model.train()

for inputs, _ in loader:

inputs = Variable(inputs)

output, mean, logvar = model(inputs)

loss = vae_loss(output, inputs, mean, logvar, loss_func)

optimizer.zero_grad()

loss.backward()

optimizer.step()

Further reading

References

| [1] | https://hackernoon.com/autoencoders-deep-learning-bits-1-11731e200694 |

| [2] | https://iq.opengenus.org/basics-of-machine-learning-image-classification-techniques/ |

| [3] | http://guertl.me/post/162759264070/generative-adversarial-networks |

| [4] | http://kvfrans.com/variational-autoencoders-explained |

| [5] | `Applied Deep Learning - Part 3: Autoencoders |

<https://towardsdatascience .com/applied-deep-learning-part-3-autoencoders-1c083af4d798/>`__

| [6] | `Deep Learning Book - Autoencoders <https://www.deeplearningbook |

.org/contents/autoencoders.html/>`__

| [7] | t-SNE |

| [8] | VAE |